You’re being watched

Implications of AI in decision-making become pressing when we reflect on advancements in this field

To what extent should we allow AI to make decisions for us? This is not a hypothetical question but one that demands a concrete answer as artificial intelligence is increasingly integrated into crucial aspects of governance, security, and surveillance. The implications of AI in decision-making become even more pressing when we reflect on recent, once-unthinkable advances in this field. Yuval Noah Harari, in his latest book ‘Nexus: A Brief History of Information Networks from the Stone Age to AI’, highlights a chilling example. He explains how the US National Security Agency (NSA) used an AI system called Skynet to analyse electronic communications, travel patterns, social media posts, and other metadata, and to place people on a list of suspected terrorists. According to Harari, this same system is employed for mass surveillance in Pakistan, combing through the metadata of 55 million mobile users. An algorithm that assesses a person’s likelihood of becoming a terrorist may be terrifyingly efficient – but is it trustworthy?

The answer, unfortunately, points towards caution. AI systems, despite their promise, have repeatedly shown themselves prone to errors – often with disastrous consequences. Pakistan, it seems, is not exempt from such risks. If AI tools like Skynet are being used to monitor citizens, where is the accountability for mistakes? Do we have any data to demonstrate the accuracy of these tools? Or are we simply trusting them, hoping that the system is fair? AI models can only be as reliable as the data they are trained on, and as impartial as the people who build them. But what happens when that data is biased or when human prejudice filters into the training process? Without transparency on these fronts, we are left with AI systems that reflect and even amplify existing biases, potentially labelling innocent people as terrorists. Another worrying factor is the severity of terrorism charges, which are rarely accompanied by the option of bail. When an AI decision leads to a wrongful accusation, the accused may be locked into a system that offers little recourse. This is especially alarming in a country like Pakistan, where digital illiteracy is rampant, and many are unaware of how their social media activities might be interpreted. An offhand comment, an innocent post, or even a pattern of communication could be enough for AI to flag someone as suspicious.

This brings us to the issue of misplaced priorities. It seems that authorities, rather than addressing the root causes of discontent – like youth alienation – are more focused on what is being said on social media. Young people are increasingly polarised, with few outlets to engage in meaningful activities or discussions. Instead of investing in creating ‘third places’, spaces where people can meet and cooperate constructively, authorities have defaulted to surveillance. But monitoring a generation that has little to do except express frustration online is not the answer. Rather than relying on AI to police thought and expression, why not invest in opportunities for constructive engagement? Young people should be encouraged to engage in discussions that lead to problem-solving, rather than being met with repression. In the meantime, their online activities – most of which are far less complex than AI might interpret them to be – should be viewed with greater nuance.

-

Kash Patel Fires FBI Officials Behind Trump Mar-a-Lago Documents Probe, Reports Say

Kash Patel Fires FBI Officials Behind Trump Mar-a-Lago Documents Probe, Reports Say -

Martin Short's Daughter Katherine's Death Takes Shocking Turn As Terrific Details Emerge

Martin Short's Daughter Katherine's Death Takes Shocking Turn As Terrific Details Emerge -

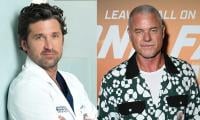

Patrick Dempsey Reacts To Tragic Death Of His 'Grey's Anatomy' Co-star Eric Dane

Patrick Dempsey Reacts To Tragic Death Of His 'Grey's Anatomy' Co-star Eric Dane -

Sidney Crosby Injury News Shakes Penguins After Olympic Tournament

Sidney Crosby Injury News Shakes Penguins After Olympic Tournament -

Yankees Honour CC Sabathia With No. 52 Retirement This September

Yankees Honour CC Sabathia With No. 52 Retirement This September -

Cuban Government Says Boat Full Of Armed Men Fired On Border Guards, Killing 4

Cuban Government Says Boat Full Of Armed Men Fired On Border Guards, Killing 4 -

Lily Collins Faces Intense Pressure After Landing Audrey Hepburn Role: Source

Lily Collins Faces Intense Pressure After Landing Audrey Hepburn Role: Source -

FIFA World Cup Security Concerns Spike After Recent Cartel Violence In Mexico

FIFA World Cup Security Concerns Spike After Recent Cartel Violence In Mexico -

Shamed Andrew Ordered To Curb Hobby: ‘It’s A Bad Look’

Shamed Andrew Ordered To Curb Hobby: ‘It’s A Bad Look’ -

Cardi B 'no-nonsense' Move: Why She Distanced Herself From Stefon Diggs? Source

Cardi B 'no-nonsense' Move: Why She Distanced Herself From Stefon Diggs? Source -

Metallica Announce 2026 ‘Life Burns Faster’ Las Vegas Sphere Residency

Metallica Announce 2026 ‘Life Burns Faster’ Las Vegas Sphere Residency -

‘From Dating Scams To Fake Lawyers’: OpenAI Bans ChatGPT Accounts Over Misuse

‘From Dating Scams To Fake Lawyers’: OpenAI Bans ChatGPT Accounts Over Misuse -

Amy Schumer Reveals She Pushed Through Illness Mid-performance: 'Proud I Made It'

Amy Schumer Reveals She Pushed Through Illness Mid-performance: 'Proud I Made It' -

Shamed Andrew Can No Longer Take The ‘heat’ Of His Actions

Shamed Andrew Can No Longer Take The ‘heat’ Of His Actions -

Royals Adamant To Show Andrew Is ‘just One Bad Apple’

Royals Adamant To Show Andrew Is ‘just One Bad Apple’ -

Jessie Buckley Reveals Why BAFTA Win Felt Extra 'special' With Cillian Murphy

Jessie Buckley Reveals Why BAFTA Win Felt Extra 'special' With Cillian Murphy