TikTok announces community guidelines in Urdu in bid to address Pakistan's concerns

Pakistan is among the five markets with the largest volume of TikTok videos removed through content moderation

KARACHI: TikTok has updated its community guidelines to make them available in Urdu for its fans in Pakistan, the company said Thursday, just two weeks after representatives of the Chinese-owned video-sharing app and Pakistan Telecommunication Authority (PTA) had a back-and-forth over "obscene" content.

The PTA had earlier issued a final warning to the social media firm on July 21 and asked it "to moderate the socialisation and content within legal and moral limits, in accordance with the laws of the country".

In a press release on Thursday, TikTok said that providing the community guidelines in Urdu was aimed at helping "maintain a supportive and welcoming environment for its Pakistan users" and offering more space for fun and creative expression as the app becomes increasingly popular in the country.

"Addressing this, TikTok has released an updated publication of the Community Guidelines in Urdu that will help and maintain a supportive and welcoming environment on TikTok for users in Pakistan," the company said.

It underscored that the community guidelines provide guidance on "what is and what is not allowed on the platform, keeping TikTok a safe place for creativity and joy, and are localized and implemented in accordance with local laws and norms".

"TikTok’s teams remove content that violates the Community Guidelines, and suspends or bans accounts involved in severe or repeated violations.

"Content moderation is performed by deploying a combination of policies, technologies, and moderation strategies to detect and review problematic content, accounts, and implement appropriate penalties," it added.

Pakistan one of top markets with most removed videos

The Chinese app also pointed out that Pakistan, according to its latest transparency report, was "one of the top 5 markets with the largest volume of removed videos on TikTok for violating community guidelines or terms of service".

"This demonstrates TikTok’s commitment to remove any potentially harmful or inappropriate content reported in Pakistan."

TikTok said its systems could automatically flag certain types of content that violated the community guidelines, "enabling it to take swift action and reduce potential harm".

"These systems take into account things like patterns or behavioural signals to flag potentially violative content," it added.

Content moderation

Noting that technology is not yet advanced enough to enforce its policies, the company underlined that "context can be important when determining whether certain content, like satire, is violative".

Referring to its content moderation team, it said: "In some cases, this team removes evolving or trending violative content, such as dangerous challenges or harmful misinformation."

The company said content moderation was also based on reports from TikTok users who used the in-app reporting feature "to flag potentially inappropriate content or accounts to TikTok".

-

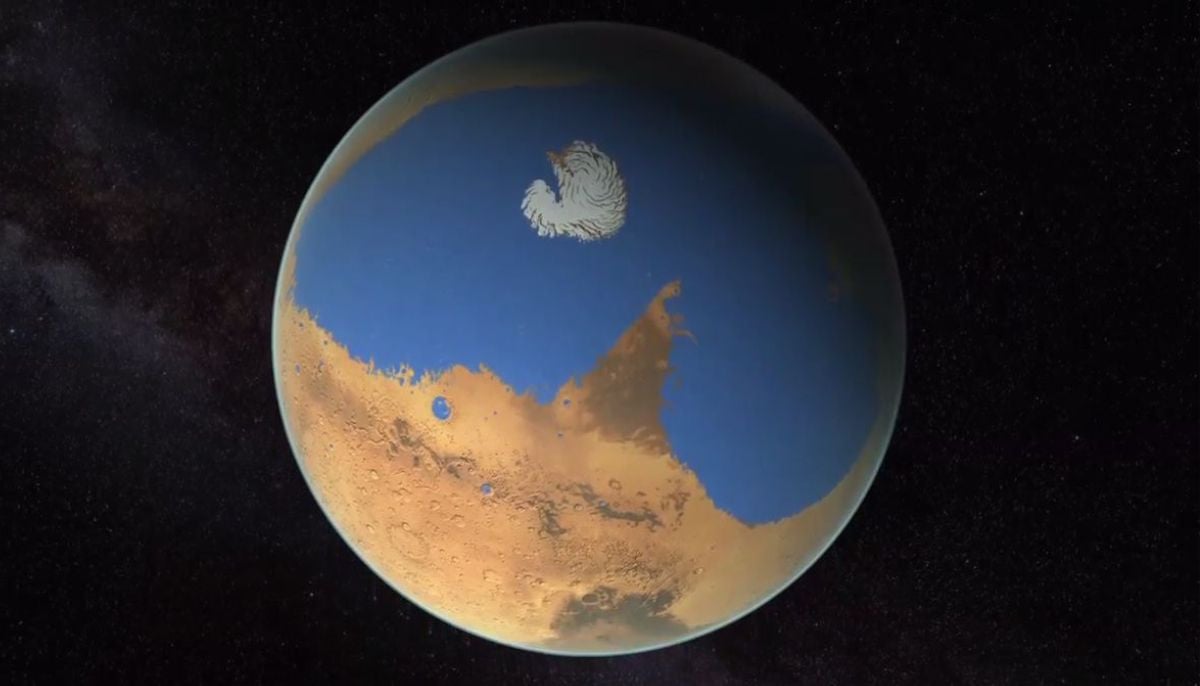

Total Lunar eclipse: What you need to know and where to watch

-

Sun appears spotless for first time in four years, scientists report

-

SpaceX launches another batch of satellites from Cape Canaveral during late-night mission on Saturday

-

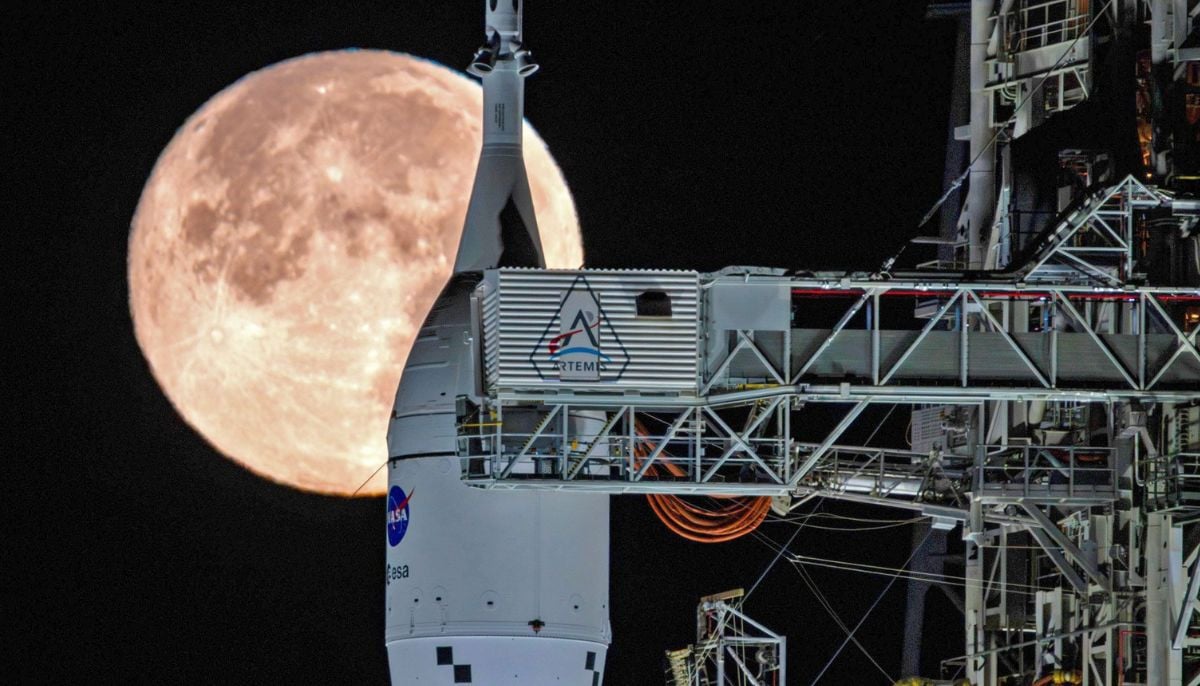

NASA targets March 6 for launch of crewed mission around moon following successful rocket fueling test

-

Greenland ice sheet acts like ‘churning molten rock,’ scientists find

-

Space-based solar power could push the world beyond net zero: Here’s how

-

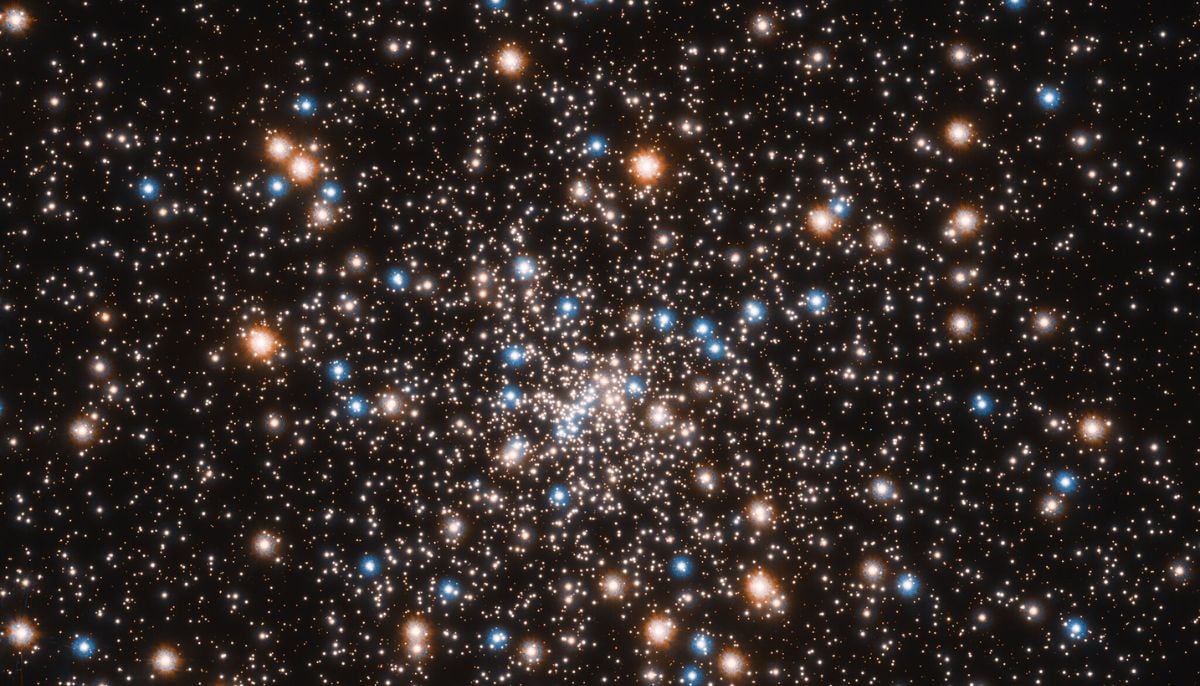

Hidden ‘dark galaxy' traced by ancient star clusters could rewrite the cosmic galaxy count

-

Astronauts face life threatening risk on Boeing Starliner, NASA says