Meta's move against AI deepfakes: Oversight Board steps up to protect users

Meta's Oversight Board is seeking public comment on AI deepfake pornography

Meta's Oversight Board will review two cases concerning how Facebook and Instagram handled content containing artificial intelligence (AI)-generated nude images of two famous women, the board announced Tuesday.

As part of its review of the cases, the board is requesting public comment on concerns about AI deepfake pornography, according to The Hill.

One case concerns an AI-generated nude image made to resemble an American public figure.

Facebook automatically removed the image after it had been identified by a previous poster as violating Meta's bullying and harassment policies.

The other case involves an AI-generated nude image made to resemble a public figure from India, which Instagram did not initially remove after it was reported.

The image was removed only after the board selected the case and Meta determined the content had been left up "in error," according to the board.

"In order to prevent further harm or risk of gender-based harassment, the board is not naming the involved individuals," a spokesperson for the Oversight Board said.

The board, which is funded through a grant from Meta but operates independently of the company, can issue binding decisions about the content.

Its policy recommendations, however, are non-binding, and Meta has the final say over which ones it implements.

The board is seeking comments on strategies Meta could use to address deepfake pornography.

It is also seeking input on the challenges of relying on automated systems that can close appeals within 48 hours if no review has taken place.

-

Shanghai Fusion ‘Artificial Sun’ achieves groundbreaking results with plasma control record

-

Polar vortex ‘exceptional’ disruption: Rare shift signals extreme February winter

-

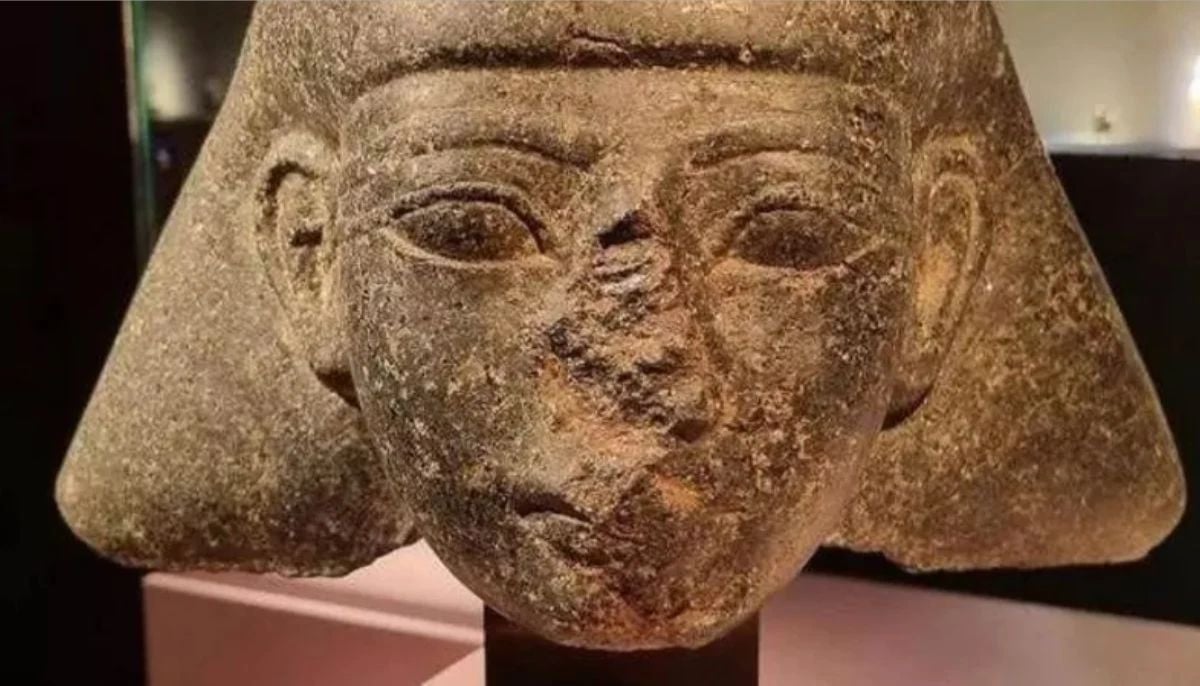

Netherlands repatriates 3500-year-old Egyptian sculpture looted during Arab Spring

-

Archaeologists recreate 3,500-year-old Egyptian perfumes for modern museums

-

Smartphones in orbit? NASA’s Crew-12 and Artemis II missions to use latest mobile tech

-

Rare deep-sea discovery: ‘School bus-size’ phantom jellyfish spotted in Argentina

-

NASA eyes March moon mission launch following test run setbacks

-

February offers 8 must-see sky events including rare eclipse and planet parade