Video game technology allows paralysed woman to speak

This is the same software used to drive facial animation in games, turning brain waves into a talking digital avatar

Researchers believe they have achieved a breakthrough by enabling a woman left paralysed by a stroke to speak again, using a technology often employed in video games.

Researchers at UC San Francisco (UCSF) and UC Berkeley created the world's first brain-computer interface to produce electronic speech and facial expressions from brain signals, according to a report in The Independent.

The work could open another door to helping those who have lost their natural means of communicating.

The researchers decoded signals into three forms of communication: text, synthetic voice, and facial animation on a digital avatar, including lip sync and emotional expressions.

The researchers said it was the first time that facial animation had been synthesised from brain waves.

Edward Chang, chairman of neurological surgery at UCSF, said: "Our goal is to restore a full, embodied way of communicating, which is really the most natural way for us to talk with others. These advancements bring us much closer to making this a real patient solution."

The signals that would otherwise have travelled to the muscles in her tongue, jaw, voice box and face were intercepted and relayed by wire to computers running artificial intelligence (AI), which was trained to analyse her brain activity.

She was eventually able to communicate through text and a synthetic voice, which was based on a recording from her wedding, made before she was paralysed.

Michael Berger, the CTO and co-founder of Speech Graphics, said: "Creating a digital avatar that can speak, emote and articulate in real-time, connected directly to the subject's brain, shows the potential for AI-driven faces well beyond video games."

"When we speak, it's a complex combination of audio and visual cues that helps us express how we feel and what we have to say," he added.

"Restoring voice alone is impressive, but facial communication is so intrinsic to being human, and it restores a sense of embodiment and control to the patient who has lost that," he said. "I hope that the work we've done in conjunction with Professor Chang can go on to help many more people."

The study, published in the journal Nature, stated that the woman could also make the avatar express specific emotions and move individual muscles.

Kaylo Littlejohn, a graduate student working with Dr Chang, said: "We’re making up for the connections between the brain and vocal tract that have been severed by the stroke. When the subject first used this system to speak and move the avatar’s face in tandem, I knew that this was going to be something that would have a real impact."

Lead author Frank Willett said: "This is a scientific proof of concept, not an actual device people can use in everyday life. But it's a big advance toward restoring rapid communication to people with paralysis who can't speak."

-

SpaceX pivots from Mars plans to prioritize 2027 Moon landing

-

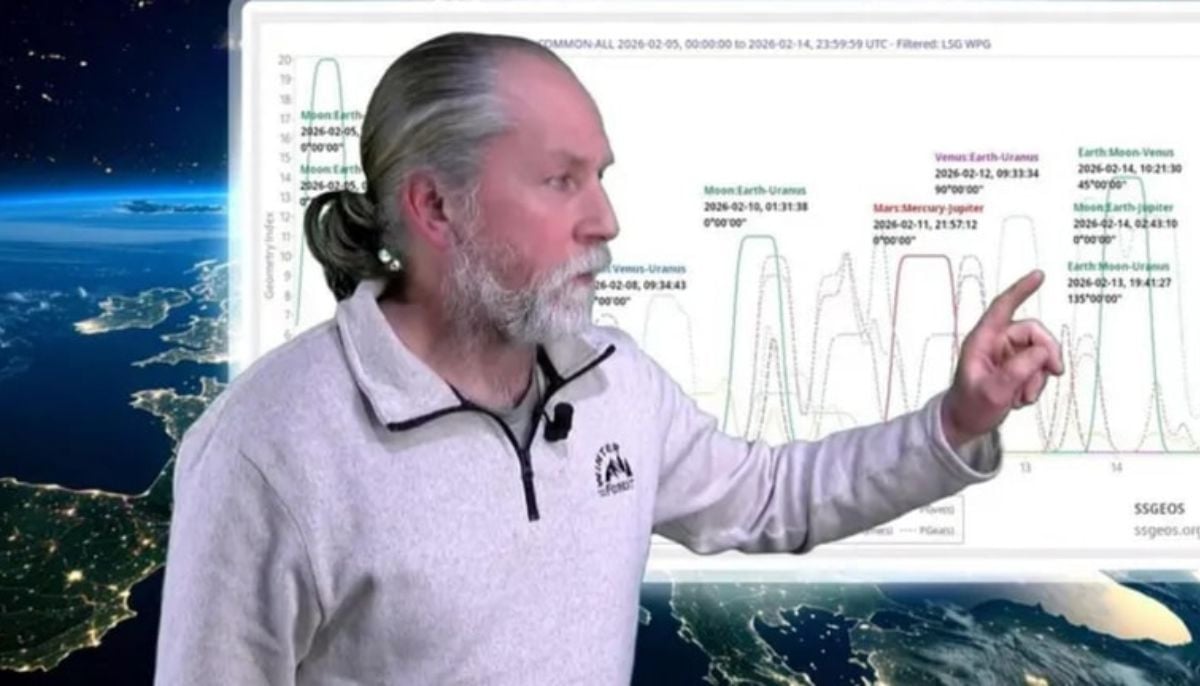

Dutch seismologist hints at 'surprise’ quake in coming days

-

SpaceX cleared for NASA Crew-12 launch after Falcon 9 review

-

Is dark matter real? New theory proposes it could be gravity behaving strangely

-

Shanghai Fusion ‘Artificial Sun’ achieves groundbreaking results with plasma control record

-

Polar vortex ‘exceptional’ disruption: Rare shift signals extreme February winter

-

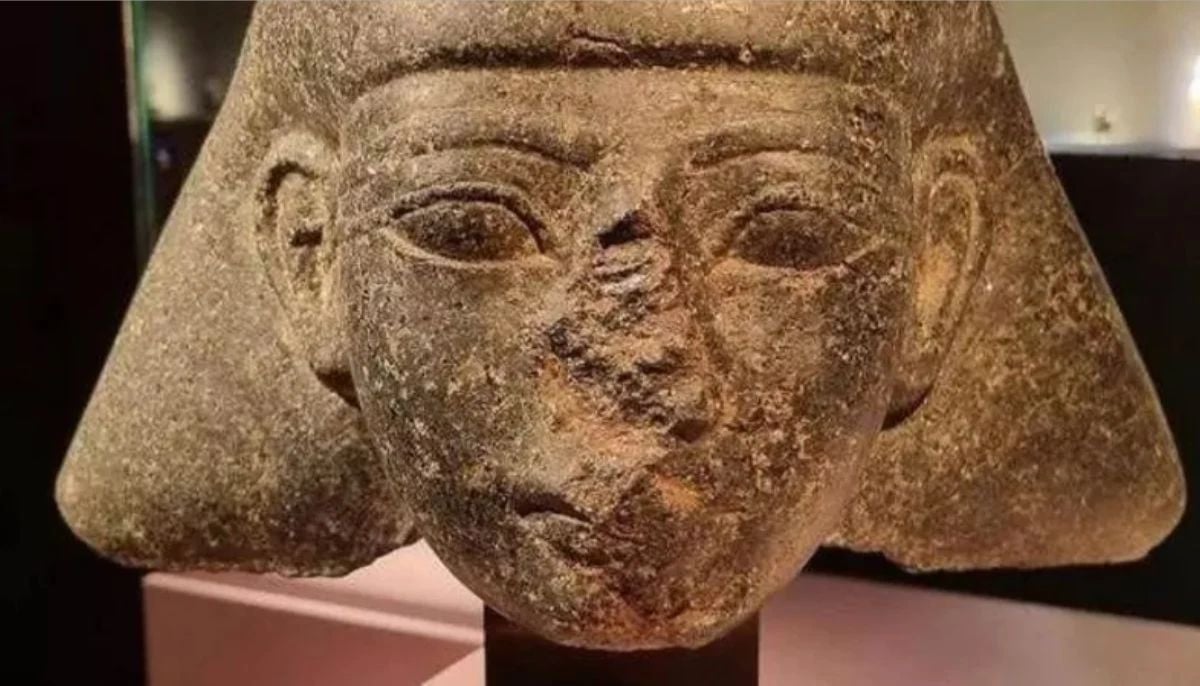

Netherlands repatriates 3500-year-old Egyptian sculpture looted during Arab Spring

-

Archaeologists recreate 3,500-year-old Egyptian perfumes for modern museums