Elon Musk, other tech giants call for pause in AI experiments

"AI systems with human-competitive intelligence can pose profound risks to society and humanity," says open letter

Elon Musk, a billionaire businessman, and a group of other tech giants called on Wednesday for a pause in the development of powerful artificial intelligence (AI) systems to give researchers time to ensure their safety.

In an open letter urging AI laboratories to review massive AI systems, hundreds of eminent AI researchers have raised concerns about the "profound risks" that these systems pose to society and humanity.

According to the letter, published by the nonprofit Future of Life Institute, AI labs are currently locked in an “out-of-control race” to develop and deploy machine learning systems “that no one — not even their creators — can understand, predict, or reliably control.”

"AI systems with human-competitive intelligence can pose profound risks to society and humanity," said the open letter.

"Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable."

The letter asks AI developers everywhere to pause work on these powerful systems so that researchers have the time they need to verify their safety.

Author Yuval Noah Harari, Apple co-founder Steve Wozniak, Skype co-founder Jaan Tallinn, politician Andrew Yang, and several prominent AI academics and CEOs, including Stuart Russell, Yoshua Bengio, Gary Marcus, and Emad Mostaque, are among those who have signed the letter.

The release of GPT-4 by the San Francisco company OpenAI served as the primary impetus for the letter.

The company says its latest model is much more powerful than the previous version, which was used to power ChatGPT, a bot capable of generating tracts of text from the briefest of prompts.

“Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4,” says the letter. “This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.”

Musk was an initial investor in OpenAI and spent years on its board. His car firm Tesla develops AI systems to help power its self-driving technology, among other applications.

The letter, hosted by the Musk-funded Future of Life Institute, was signed by prominent critics as well as competitors of OpenAI like Stability AI chief Emad Mostaque.

The letter quoted from a blog post written by OpenAI co-founder Sam Altman, who suggested that "at some point, it may be important to get an independent review before starting to train future systems".

"We agree. That point is now," the authors of the open letter wrote.

"Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4."

They called for governments to step in and impose a moratorium if companies failed to agree.

The six months should be used to develop safety protocols and AI governance systems, and to refocus research on ensuring AI systems are more accurate, safe, "trustworthy and loyal".

The letter did not detail the dangers revealed by GPT-4.

-

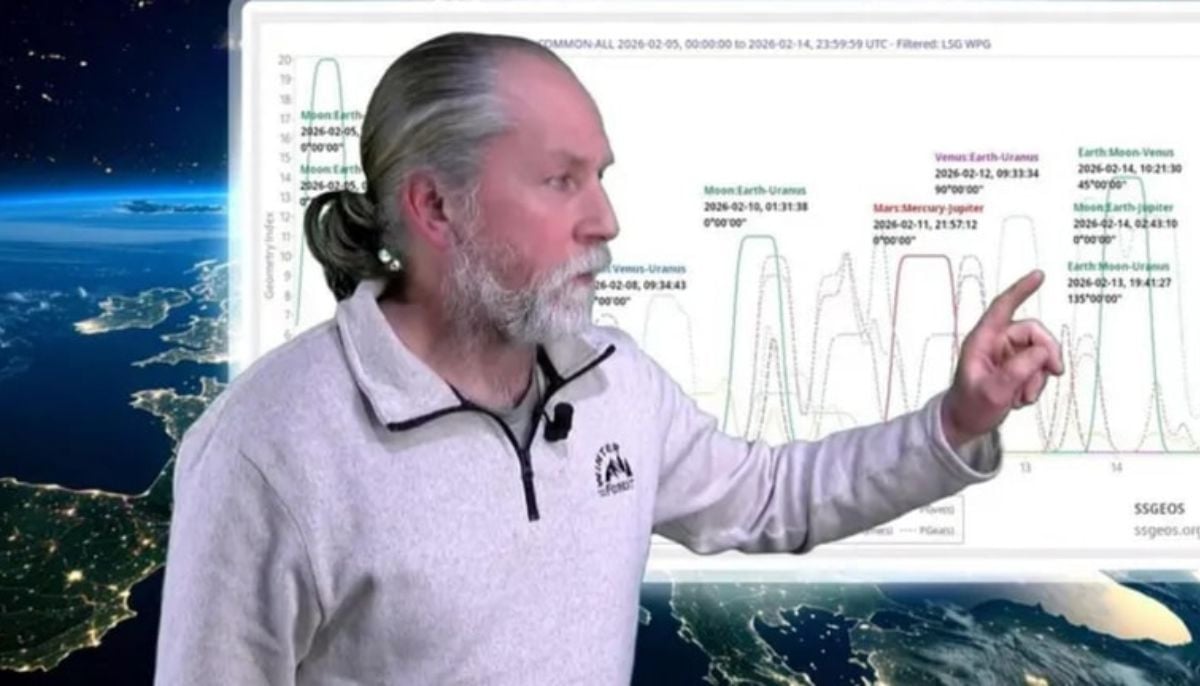

Dutch seismologist hints at 'surprise’ quake in coming days

-

SpaceX cleared for NASA Crew-12 launch after Falcon 9 review

-

Is dark matter real? New theory proposes it could be gravity behaving strangely

-

Shanghai Fusion ‘Artificial Sun’ achieves groundbreaking results with plasma control record

-

Polar vortex ‘exceptional’ disruption: Rare shift signals extreme February winter

-

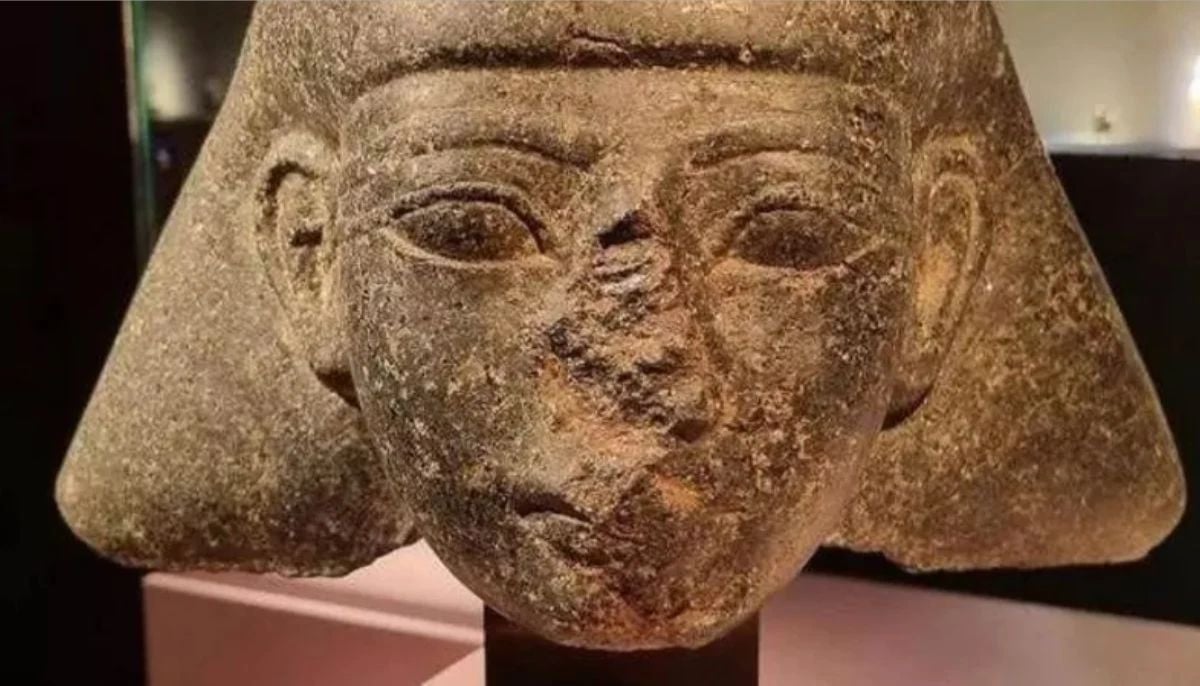

Netherlands repatriates 3500-year-old Egyptian sculpture looted during Arab Spring

-

Archaeologists recreate 3,500-year-old Egyptian perfumes for modern museums

-

Smartphones in orbit? NASA’s Crew-12 and Artemis II missions to use latest mobile tech